Blog

The OCaml Planet RSS

Articles and videos contributed by experts, companies, and passionate developers from the OCaml community. From in-depth technical articles and project highlights to community news and insights into open source projects, the OCaml Planet RSS feed aggregator has something for everyone.

Want your Blog Posts or Videos to Show Here?

To contribute a blog post, or add your RSS feed, check out the Contributing Guide on GitHub.

`if-then-else` expressions. Textbook: https://cs3110.github.io/textbook

Expressions and values; the OCaml toplevel, utop. Textbook: https://cs3110.github.io/textbook

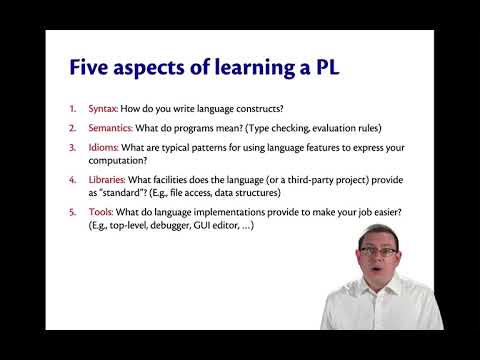

Syntax, semantics, idioms, libraries, tools. Textbook: https://cs3110.github.io/textbook

More about anonymous functions, aka lambdas. Textbook: https://cs3110.github.io/textbook

Unnamed function values. Textbook: https://cs3110.github.io/textbook
